The post How do we configure PostgreSQL for performance ? appeared first on The WebScale Database Infrastructure Operations Experts.

We work on some of the largest PostgreSQL database infrastructures globally, and we are often asked how to configure PostgreSQL for performance, scalability and reliability, so we decided to write a post on the subject. PostgreSQL is a highly tunable database system, but wrong values for its configuration parameters will hurt overall performance. Higher values are not always better, so we strongly recommend understanding how each parameter should be sized before changing its default. Please don’t treat this post as a generic PostgreSQL configuration run-book or as recommendations for your production infrastructure; use it as a checklist when configuring your own PostgreSQL systems. The post covers tuning of configuration parameters such as max_connections, shared_buffers, effective_cache_size, work_mem, maintenance_work_mem, seq_page_cost, random_page_cost, wal_buffers and autovacuum. The post / presentation is organized as follows:

- Configuring PostgreSQL connection handling for performance

- How to tune PostgreSQL memory parameters for performance and scalability

- Tuning PostgreSQL optimizer for performance and efficient indexing

- Configuring PostgreSQL WAL files for performance

- Tuning PostgreSQL writer process

- Tuning PostgreSQL checkpointing

- Tuning PostgreSQL autovacuum

- Troubleshooting PostgreSQL performance with logs

Download our featured whitepaper on configuring PostgreSQL for performance. If you’re a PostgreSQL DBA or a technology executive looking to improve performance and prepare for high-traffic PostgreSQL infrastructure, this is a must-read!

☛ MinervaDB is trusted by top companies worldwide

The post PostgreSQL DBA Daily Checklist appeared first on The WebScale Database Infrastructure Operations Experts.

We are often asked: what are the most important things a PostgreSQL DBA should do to guarantee optimal performance and reliability, and is there a checklist for PostgreSQL DBAs to follow daily? Because the question comes up so often, we decided to write it up as a blog post and share it with the PostgreSQL community. The only objective of this post is to share information; please don’t consider it a run-book or a recommendation from MinervaDB PostgreSQL support. We at MinervaDB are not accountable for any negative impact on your PostgreSQL performance from running these scripts in your production database infrastructure. The following is a simple daily checklist for a PostgreSQL DBA:

Task 1: Check that all the PostgreSQL instances are up and operational:

pgrep -u postgres -fa -- -D

If you have several instances of PostgreSQL running:

pgrep -fa -- -D |grep postgres

Task 2: Monitor PostgreSQL logs

Record PostgreSQL error logs: open the postgresql.conf configuration file and, under the ERROR REPORTING AND LOGGING section of the file, set the following parameters:

log_destination = 'stderr'

logging_collector = on

log_directory = 'pg_log'

log_filename = 'postgresql-%Y-%m-%d_%H%M%S.log'

log_truncate_on_rotation = off

log_rotation_age = 1d

log_min_duration_statement = 0    # 0 logs the duration of every statement

log_connections = on

log_duration = on

log_hostname = on

log_timezone = 'UTC'

Save the postgresql.conf file and restart the postgres server.

sudo service postgresql restart

Task 3: Confirm PostgreSQL backup completed successfully

Use backup logs (possible only with PostgreSQL logical backup) to audit backup quality:

$ pg_dumpall > /backup-path/pg-backup-dump.sql 2> /var/log/postgres/pg-backup-dump.log
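A backup log only helps if something checks it. The sketch below is one hedged way to wrap the dump in Python so that the exit status and the size of the dump file decide whether the backup counts as successful. The function name `run_backup` is an illustrative assumption and not part of any PostgreSQL tooling; the command is passed in as a list, so the same wrapper could be used with `pg_dumpall` or `pg_dump`.

```python
import subprocess
from pathlib import Path


def run_backup(command, dump_path, log_path):
    """Run a dump command, sending stdout to dump_path and stderr to log_path.

    Returns True only when the command exits with status 0 and the dump
    file is not empty. `command` is a list such as ["pg_dumpall"].
    """
    dump_path, log_path = Path(dump_path), Path(log_path)
    with dump_path.open("wb") as dump, log_path.open("wb") as log:
        result = subprocess.run(command, stdout=dump, stderr=log)
    return result.returncode == 0 and dump_path.stat().st_size > 0
```

With the paths from the example above, a call would look like `run_backup(["pg_dumpall"], "/backup-path/pg-backup-dump.sql", "/var/log/postgres/pg-backup-dump.log")`. A successful exit status does not prove the dump can be restored, so a periodic test restore is still worthwhile.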

Task 4: Monitoring PostgreSQL Database Size:

select datname, pg_size_pretty(pg_database_size(datname)) from pg_database order by pg_database_size(datname);

Task 5: Monitor all PostgreSQL queries running (please repeat this task every 90 minutes during business / peak hours):

SELECT pid, age(clock_timestamp(), query_start), usename, query FROM pg_stat_activity WHERE state != 'idle' AND query NOT ILIKE '%pg_stat_activity%' ORDER BY query_start desc;

Task 6: Inventory of indexes in PostgreSQL database:

select

t.relname as table_name,

i.relname as index_name,

string_agg(a.attname, ',') as column_name

from

pg_class t,

pg_class i,

pg_index ix,

pg_attribute a

where

t.oid = ix.indrelid

and i.oid = ix.indexrelid

and a.attrelid = t.oid

and a.attnum = ANY(ix.indkey)

and t.relkind = 'r'

and t.relname not like 'pg_%'

group by

t.relname,

i.relname

order by

t.relname,

i.relname;

Task 7: Finding the largest databases in your PostgreSQL cluster

SELECT d.datname as Name, pg_catalog.pg_get_userbyid(d.datdba) as Owner,

CASE WHEN pg_catalog.has_database_privilege(d.datname, 'CONNECT')

THEN pg_catalog.pg_size_pretty(pg_catalog.pg_database_size(d.datname))

ELSE 'No Access'

END as Size

FROM pg_catalog.pg_database d

order by

CASE WHEN pg_catalog.has_database_privilege(d.datname, 'CONNECT')

THEN pg_catalog.pg_database_size(d.datname)

ELSE NULL

END desc -- nulls first

LIMIT 20

Task 8: Use this query when you suspect a serious performance bottleneck in PostgreSQL, especially when you suspect transactions are blocking each other:

WITH RECURSIVE l AS (

SELECT pid, locktype, mode, granted,

ROW(locktype,database,relation,page,tuple,virtualxid,transactionid,classid,objid,objsubid) obj

FROM pg_locks

), pairs AS (

SELECT w.pid waiter, l.pid locker, l.obj, l.mode

FROM l w

JOIN l ON l.obj IS NOT DISTINCT FROM w.obj AND l.locktype=w.locktype AND NOT l.pid=w.pid AND l.granted

WHERE NOT w.granted

), tree AS (

SELECT l.locker pid, l.locker root, NULL::record obj, NULL AS mode, 0 lvl, locker::text path, array_agg(l.locker) OVER () all_pids

FROM ( SELECT DISTINCT locker FROM pairs l WHERE NOT EXISTS (SELECT 1 FROM pairs WHERE waiter=l.locker) ) l

UNION ALL

SELECT w.waiter pid, tree.root, w.obj, w.mode, tree.lvl+1, tree.path||'.'||w.waiter, all_pids || array_agg(w.waiter) OVER ()

FROM tree JOIN pairs w ON tree.pid=w.locker AND NOT w.waiter = ANY ( all_pids )

)

SELECT (clock_timestamp() - a.xact_start)::interval(3) AS ts_age,

replace(a.state, 'idle in transaction', 'idletx') state,

(clock_timestamp() - state_change)::interval(3) AS change_age,

a.datname,tree.pid,a.usename,a.client_addr,lvl,

(SELECT count(*) FROM tree p WHERE p.path ~ ('^'||tree.path) AND NOT p.path=tree.path) blocked,

repeat(' .', lvl)||' '||left(regexp_replace(query, '\s+', ' ', 'g'),100) query

FROM tree

JOIN pg_stat_activity a USING (pid)

ORDER BY path;

Task 9: Identify bloated tables in PostgreSQL:

WITH constants AS (

-- define some constants for sizes of things

-- for reference down the query and easy maintenance

SELECT current_setting('block_size')::numeric AS bs, 23 AS hdr, 8 AS ma

),

no_stats AS (

-- screen out tables that have attributes

-- which don't have stats, such as JSON

SELECT table_schema, table_name,

n_live_tup::numeric as est_rows,

pg_table_size(relid)::numeric as table_size

FROM information_schema.columns

JOIN pg_stat_user_tables as psut

ON table_schema = psut.schemaname

AND table_name = psut.relname

LEFT OUTER JOIN pg_stats

ON table_schema = pg_stats.schemaname

AND table_name = pg_stats.tablename

AND column_name = attname

WHERE attname IS NULL

AND table_schema NOT IN ('pg_catalog', 'information_schema')

GROUP BY table_schema, table_name, relid, n_live_tup

),

null_headers AS (

-- calculate null header sizes

-- omitting tables which dont have complete stats

-- and attributes which aren't visible

SELECT

hdr+1+(sum(case when null_frac <> 0 THEN 1 else 0 END)/8) as nullhdr,

SUM((1-null_frac)*avg_width) as datawidth,

MAX(null_frac) as maxfracsum,

schemaname,

tablename,

hdr, ma, bs

FROM pg_stats CROSS JOIN constants

LEFT OUTER JOIN no_stats

ON schemaname = no_stats.table_schema

AND tablename = no_stats.table_name

WHERE schemaname NOT IN ('pg_catalog', 'information_schema')

AND no_stats.table_name IS NULL

AND EXISTS ( SELECT 1

FROM information_schema.columns

WHERE schemaname = columns.table_schema

AND tablename = columns.table_name )

GROUP BY schemaname, tablename, hdr, ma, bs

),

data_headers AS (

-- estimate header and row size

SELECT

ma, bs, hdr, schemaname, tablename,

(datawidth+(hdr+ma-(case when hdr%ma=0 THEN ma ELSE hdr%ma END)))::numeric AS datahdr,

(maxfracsum*(nullhdr+ma-(case when nullhdr%ma=0 THEN ma ELSE nullhdr%ma END))) AS nullhdr2

FROM null_headers

),

table_estimates AS (

-- make estimates of how large the table should be

-- based on row and page size

SELECT schemaname, tablename, bs,

reltuples::numeric as est_rows, relpages * bs as table_bytes,

CEIL((reltuples*

(datahdr + nullhdr2 + 4 + ma -

(CASE WHEN datahdr%ma=0

THEN ma ELSE datahdr%ma END)

)/(bs-20))) * bs AS expected_bytes,

reltoastrelid

FROM data_headers

JOIN pg_class ON tablename = relname

JOIN pg_namespace ON relnamespace = pg_namespace.oid

AND schemaname = nspname

WHERE pg_class.relkind = 'r'

),

estimates_with_toast AS (

-- add in estimated TOAST table sizes

-- estimate based on 4 toast tuples per page because we dont have

-- anything better. also append the no_data tables

SELECT schemaname, tablename,

TRUE as can_estimate,

est_rows,

table_bytes + ( coalesce(toast.relpages, 0) * bs ) as table_bytes,

expected_bytes + ( ceil( coalesce(toast.reltuples, 0) / 4 ) * bs ) as expected_bytes

FROM table_estimates LEFT OUTER JOIN pg_class as toast

ON table_estimates.reltoastrelid = toast.oid

AND toast.relkind = 't'

),

table_estimates_plus AS (

-- add some extra metadata to the table data

-- and calculations to be reused

-- including whether we cant estimate it

-- or whether we think it might be compressed

SELECT current_database() as databasename,

schemaname, tablename, can_estimate,

est_rows,

CASE WHEN table_bytes > 0

THEN table_bytes::NUMERIC

ELSE NULL::NUMERIC END

AS table_bytes,

CASE WHEN expected_bytes > 0

THEN expected_bytes::NUMERIC

ELSE NULL::NUMERIC END

AS expected_bytes,

CASE WHEN expected_bytes > 0 AND table_bytes > 0

AND expected_bytes <= table_bytes

THEN (table_bytes - expected_bytes)::NUMERIC

ELSE 0::NUMERIC END AS bloat_bytes

FROM estimates_with_toast

UNION ALL

SELECT current_database() as databasename,

table_schema, table_name, FALSE,

est_rows, table_size,

NULL::NUMERIC, NULL::NUMERIC

FROM no_stats

),

bloat_data AS (

-- do final math calculations and formatting

select current_database() as databasename,

schemaname, tablename, can_estimate,

table_bytes, round(table_bytes/(1024^2)::NUMERIC,3) as table_mb,

expected_bytes, round(expected_bytes/(1024^2)::NUMERIC,3) as expected_mb,

round(bloat_bytes*100/table_bytes) as pct_bloat,

round(bloat_bytes/(1024::NUMERIC^2),2) as mb_bloat,

table_bytes, expected_bytes, est_rows

FROM table_estimates_plus

)

-- filter output for bloated tables

SELECT databasename, schemaname, tablename,

can_estimate,

est_rows,

pct_bloat, mb_bloat,

table_mb

FROM bloat_data

-- this where clause defines which tables actually appear

-- in the bloat chart

-- example below filters for tables which are either 50%

-- bloated and more than 20mb in size, or more than 25%

-- bloated and more than 4GB in size

WHERE ( pct_bloat >= 50 AND mb_bloat >= 10 )

OR ( pct_bloat >= 25 AND mb_bloat >= 1000 )

ORDER BY pct_bloat DESC;
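The arithmetic behind the final filter is easier to see outside the query. The following is a minimal Python sketch of the `pct_bloat` / `mb_bloat` calculation and the WHERE clause thresholds used above; the function names are illustrative assumptions, and the byte counts in the usage note are made-up inputs, not measurements.

```python
MB = 1024 ** 2


def bloat_estimate(table_bytes, expected_bytes):
    """Return (pct_bloat, mb_bloat) following the arithmetic of the query.

    Bloat is counted only when both sizes are positive and the table is
    larger than its expected size; otherwise it is treated as zero.
    """
    if table_bytes <= 0:
        raise ValueError("table_bytes must be positive")
    if 0 < expected_bytes <= table_bytes:
        bloat_bytes = table_bytes - expected_bytes
    else:
        bloat_bytes = 0
    pct_bloat = round(bloat_bytes * 100 / table_bytes)
    mb_bloat = round(bloat_bytes / MB, 2)
    return pct_bloat, mb_bloat


def is_reported(pct_bloat, mb_bloat):
    """Same thresholds as the WHERE clause of the bloat query."""
    return (pct_bloat >= 50 and mb_bloat >= 10) or (pct_bloat >= 25 and mb_bloat >= 1000)
```

For example, a 40 MB table that is expected to need 16 MB is 60% bloated (24 MB) and would be reported, while a 12 MB table expected to need 4 MB is 67% bloated but falls below the 10 MB floor and would be skipped.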

Task 10: Identify bloated indexes in PostgreSQL:

-- btree index stats query

-- estimates bloat for btree indexes

WITH btree_index_atts AS (

SELECT nspname,

indexclass.relname as index_name,

indexclass.reltuples,

indexclass.relpages,

indrelid, indexrelid,

indexclass.relam,

tableclass.relname as tablename,

regexp_split_to_table(indkey::text, ' ')::smallint AS attnum,

indexrelid as index_oid

FROM pg_index

JOIN pg_class AS indexclass ON pg_index.indexrelid = indexclass.oid

JOIN pg_class AS tableclass ON pg_index.indrelid = tableclass.oid

JOIN pg_namespace ON pg_namespace.oid = indexclass.relnamespace

JOIN pg_am ON indexclass.relam = pg_am.oid

WHERE pg_am.amname = 'btree' and indexclass.relpages > 0

AND nspname NOT IN ('pg_catalog','information_schema')

),

index_item_sizes AS (

SELECT

ind_atts.nspname, ind_atts.index_name,

ind_atts.reltuples, ind_atts.relpages, ind_atts.relam,

indrelid AS table_oid, index_oid,

current_setting('block_size')::numeric AS bs,

8 AS maxalign,

24 AS pagehdr,

CASE WHEN max(coalesce(pg_stats.null_frac,0)) = 0

THEN 2

ELSE 6

END AS index_tuple_hdr,

sum( (1-coalesce(pg_stats.null_frac, 0)) * coalesce(pg_stats.avg_width, 1024) ) AS nulldatawidth

FROM pg_attribute

JOIN btree_index_atts AS ind_atts ON pg_attribute.attrelid = ind_atts.indexrelid AND pg_attribute.attnum = ind_atts.attnum

JOIN pg_stats ON pg_stats.schemaname = ind_atts.nspname

-- stats for regular index columns

AND ( (pg_stats.tablename = ind_atts.tablename AND pg_stats.attname = pg_catalog.pg_get_indexdef(pg_attribute.attrelid, pg_attribute.attnum, TRUE))

-- stats for functional indexes

OR (pg_stats.tablename = ind_atts.index_name AND pg_stats.attname = pg_attribute.attname))

WHERE pg_attribute.attnum > 0

GROUP BY 1, 2, 3, 4, 5, 6, 7, 8, 9

),

index_aligned_est AS (

SELECT maxalign, bs, nspname, index_name, reltuples,

relpages, relam, table_oid, index_oid,

coalesce (

ceil (

reltuples * ( 6

+ maxalign

- CASE

WHEN index_tuple_hdr%maxalign = 0 THEN maxalign

ELSE index_tuple_hdr%maxalign

END

+ nulldatawidth

+ maxalign

- CASE /* Add padding to the data to align on MAXALIGN */

WHEN nulldatawidth::integer%maxalign = 0 THEN maxalign

ELSE nulldatawidth::integer%maxalign

END

)::numeric

/ ( bs - pagehdr::NUMERIC )

+1 )

, 0 )

as expected

FROM index_item_sizes

),

raw_bloat AS (

SELECT current_database() as dbname, nspname, pg_class.relname AS table_name, index_name,

bs*(index_aligned_est.relpages)::bigint AS totalbytes, expected,

CASE

WHEN index_aligned_est.relpages <= expected

THEN 0

ELSE bs*(index_aligned_est.relpages-expected)::bigint

END AS wastedbytes,

CASE

WHEN index_aligned_est.relpages <= expected

THEN 0

ELSE bs*(index_aligned_est.relpages-expected)::bigint * 100 / (bs*(index_aligned_est.relpages)::bigint)

END AS realbloat,

pg_relation_size(index_aligned_est.table_oid) as table_bytes,

stat.idx_scan as index_scans

FROM index_aligned_est

JOIN pg_class ON pg_class.oid=index_aligned_est.table_oid

JOIN pg_stat_user_indexes AS stat ON index_aligned_est.index_oid = stat.indexrelid

),

format_bloat AS (

SELECT dbname as database_name, nspname as schema_name, table_name, index_name,

round(realbloat) as bloat_pct, round(wastedbytes/(1024^2)::NUMERIC) as bloat_mb,

round(totalbytes/(1024^2)::NUMERIC,3) as index_mb,

round(table_bytes/(1024^2)::NUMERIC,3) as table_mb,

index_scans

FROM raw_bloat

)

-- final query outputting the bloated indexes

-- change the where and order by to change

-- what shows up as bloated

SELECT *

FROM format_bloat

WHERE ( bloat_pct > 50 and bloat_mb > 10 )

ORDER BY bloat_mb DESC;

Task 11: Monitor blocked and blocking activities in PostgreSQL:

SELECT blocked_locks.pid AS blocked_pid,

blocked_activity.usename AS blocked_user,

blocking_locks.pid AS blocking_pid,

blocking_activity.usename AS blocking_user,

blocked_activity.query AS blocked_statement,

blocking_activity.query AS current_statement_in_blocking_process

FROM pg_catalog.pg_locks blocked_locks

JOIN pg_catalog.pg_stat_activity blocked_activity ON blocked_activity.pid = blocked_locks.pid

JOIN pg_catalog.pg_locks blocking_locks

ON blocking_locks.locktype = blocked_locks.locktype

AND blocking_locks.database IS NOT DISTINCT FROM blocked_locks.database

AND blocking_locks.relation IS NOT DISTINCT FROM blocked_locks.relation

AND blocking_locks.page IS NOT DISTINCT FROM blocked_locks.page

AND blocking_locks.tuple IS NOT DISTINCT FROM blocked_locks.tuple

AND blocking_locks.virtualxid IS NOT DISTINCT FROM blocked_locks.virtualxid

AND blocking_locks.transactionid IS NOT DISTINCT FROM blocked_locks.transactionid

AND blocking_locks.classid IS NOT DISTINCT FROM blocked_locks.classid

AND blocking_locks.objid IS NOT DISTINCT FROM blocked_locks.objid

AND blocking_locks.objsubid IS NOT DISTINCT FROM blocked_locks.objsubid

AND blocking_locks.pid != blocked_locks.pid

JOIN pg_catalog.pg_stat_activity blocking_activity ON blocking_activity.pid = blocking_locks.pid

WHERE NOT blocked_locks.granted;

Task 12: Monitoring PostgreSQL Disk I/O performance

-- perform a "select pg_stat_reset();" when you want to reset counter statistics

with

all_tables as

(

SELECT *

FROM (

SELECT 'all'::text as table_name,

sum( (coalesce(heap_blks_read,0) + coalesce(idx_blks_read,0) + coalesce(toast_blks_read,0) + coalesce(tidx_blks_read,0)) ) as from_disk,

sum( (coalesce(heap_blks_hit,0) + coalesce(idx_blks_hit,0) + coalesce(toast_blks_hit,0) + coalesce(tidx_blks_hit,0)) ) as from_cache

FROM pg_statio_all_tables --> change to pg_statio_USER_tables if you want to check only user tables (excluding postgres's own tables)

) a

WHERE (from_disk + from_cache) > 0 -- discard tables without hits

),

tables as

(

SELECT *

FROM (

SELECT relname as table_name,

( (coalesce(heap_blks_read,0) + coalesce(idx_blks_read,0) + coalesce(toast_blks_read,0) + coalesce(tidx_blks_read,0)) ) as from_disk,

( (coalesce(heap_blks_hit,0) + coalesce(idx_blks_hit,0) + coalesce(toast_blks_hit,0) + coalesce(tidx_blks_hit,0)) ) as from_cache

FROM pg_statio_all_tables --> change to pg_statio_USER_tables if you want to check only user tables (excluding postgres's own tables)

) a

WHERE (from_disk + from_cache) > 0 -- discard tables without hits

)

SELECT table_name as "table name",

from_disk as "disk hits",

round((from_disk::numeric / (from_disk + from_cache)::numeric)*100.0,2) as "% disk hits",

round((from_cache::numeric / (from_disk + from_cache)::numeric)*100.0,2) as "% cache hits",

(from_disk + from_cache) as "total hits"

FROM (SELECT * FROM all_tables UNION ALL SELECT * FROM tables) a

ORDER BY (case when table_name = 'all' then 0 else 1 end), from_disk desc
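The two percentage columns in this query are plain ratios of block counters. As a small, hedged illustration, the Python sketch below repeats that arithmetic for a single table; the function name `hit_ratios` and the sample counter values are assumptions made for the example.

```python
def hit_ratios(from_disk, from_cache):
    """Return (% disk hits, % cache hits), or None when there was no I/O.

    from_disk  : blocks read from disk (heap + index + toast + toast index)
    from_cache : blocks found in shared buffers for the same relations
    """
    total = from_disk + from_cache
    if total == 0:
        return None  # the query discards such tables as well
    disk_pct = round(100.0 * from_disk / total, 2)
    cache_pct = round(100.0 * from_cache / total, 2)
    return disk_pct, cache_pct


# Hypothetical counters: 50 blocks came from disk, 950 from cache.
print(hit_ratios(50, 950))
```

Note that a block counted as a disk read by PostgreSQL may still have been served from the operating system page cache, so these figures describe shared buffer efficiency rather than physical disk activity.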

References

- https://www.postgresql.org/developer/related-projects/

- https://www.postgresql.org/community/

- https://github.com/pgexperts


The post Troubleshooting PostgreSQL Performance from Slow Queries appeared first on The WebScale Database Infrastructure Operations Experts.

Introduction

If you are doing very detailed performance diagnostics / forensics, we strongly recommend that you understand the data access path of the underlying queries, the cost of query execution, wait events / locks and the system resources used by PostgreSQL infrastructure operations. The MinervaDB Performance Engineering Team measures performance by “Response Time”, so finding slow queries in PostgreSQL is the most appropriate place to start this blog. PostgreSQL Server is highly configurable for collecting details on query performance: the slow query log, auditing execution plans with auto_explain, and querying pg_stat_statements.

Using PostgreSQL slow query log to troubleshoot the performance

Step 1 – Open the postgresql.conf file in your favorite text editor (on Ubuntu, postgresql.conf is located under /etc/postgresql/) and update the configuration parameter log_min_duration_statement. By default the slow query log is not active. To enable the slow query log globally, change postgresql.conf:

log_min_duration_statement = 2000

With this setting, PostgreSQL logs every statement that runs for 2 seconds or longer (the value is given in milliseconds).
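Once the slow query log is active, its entries can be summarized with a short script. The following Python sketch assumes the usual `duration: ... ms  statement: ...` text that PostgreSQL writes for this setting; the text before `duration:` depends on log_line_prefix and is ignored. The sample lines and the function name `slow_statements` are made up for illustration.

```python
import re

# Matches the part of a log line written by log_min_duration_statement, e.g.
#   "... LOG:  duration: 2345.678 ms  statement: SELECT ..."
DURATION_RE = re.compile(
    r"duration: (?P<ms>\d+(?:\.\d+)?) ms\s+(?:statement|execute [^:]+): (?P<sql>.*)"
)


def slow_statements(lines, threshold_ms=2000.0):
    """Return (duration_ms, sql) pairs at or above threshold_ms, slowest first."""
    found = []
    for line in lines:
        match = DURATION_RE.search(line)
        if match and float(match.group("ms")) >= threshold_ms:
            found.append((float(match.group("ms")), match.group("sql").strip()))
    return sorted(found, reverse=True)


# Hypothetical log lines, not taken from a real server.
sample = [
    "2020-07-09 10:15:02 UTC [4242] LOG:  duration: 2345.678 ms  statement: SELECT count(*) FROM orders",
    "2020-07-09 10:15:03 UTC [4242] LOG:  connection received: host=[local]",
    "2020-07-09 10:15:09 UTC [4243] LOG:  duration: 5501.755 ms  statement: SELECT 1",
]
for ms, sql in slow_statements(sample):
    print(f"{ms:10.3f} ms  {sql}")
```

Statements that span several log lines are not handled here; for anything beyond a quick look, a dedicated log analyzer is the better choice.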

Step 2 – A “reload” (by simply calling the SQL function) is sufficient; there is no need to restart the PostgreSQL server and, don’t worry, it won’t interrupt any active connections:

postgres=# SELECT pg_reload_conf();

 pg_reload_conf

----------------

 t

(1 row)

Note: Changing the slow query log settings in postgresql.conf is often too heavy for the whole PostgreSQL infrastructure, so it makes more sense to change them only for a selected database or user:

postgres=# ALTER DATABASE minervadb SET log_min_duration_statement = 2000;

ALTER DATABASE

To complete the detailed performance forensics / diagnostics of high-latency queries you can use auto_explain. The settings below make PostgreSQL send the execution plan of any query exceeding a certain threshold to the log file:

postgres=# LOAD 'auto_explain';

LOAD

postgres=# SET auto_explain.log_analyze TO on;

SET

postgres=# SET auto_explain.log_min_duration TO 2000;

SET

You can also enable auto_explain in postgresql.conf with the setting below:

session_preload_libraries = 'auto_explain'

Note: Please do not forget to call pg_reload_conf() after the change made to postgresql.conf

More examples of PostgreSQL auto_explain are copied below:

postgres=# CREATE TABLE minervdb_bench AS

postgres-# SELECT * FROM generate_series(1, 10000000) AS id;

SELECT 10000000

postgres=# CREATE INDEX idx_id ON minervdb_bench(id);

CREATE INDEX

postgres=# ANALYZE;

ANALYZE

postgres=# LOAD 'auto_explain';

LOAD

postgres=# SET auto_explain.log_analyze TO on;

SET

postgres=# SET auto_explain.log_min_duration TO 200;

SET

postgres=# explain SELECT * FROM minervdb_bench WHERE id < 5000;

QUERY PLAN

---------------------------------------------------------------------------------------

Index Only Scan using idx_id on minervdb_bench (cost=0.43..159.25 rows=4732 width=4)

Index Cond: (id < 5000)

(2 rows)

postgres=# explain SELECT * FROM minervdb_bench WHERE id < 200000;

QUERY PLAN

------------------------------------------------------------------------------------------

Index Only Scan using idx_id on minervdb_bench (cost=0.43..6550.25 rows=198961 width=4)

Index Cond: (id < 200000)

(2 rows)

postgres=# explain SELECT count(*) FROM minervdb_bench GROUP BY id % 2;

QUERY PLAN

-------------------------------------------------------------------------------------

GroupAggregate (cost=1605360.71..1805360.25 rows=9999977 width=12)

Group Key: ((id % 2))

-> Sort (cost=1605360.71..1630360.65 rows=9999977 width=4)

Sort Key: ((id % 2))

-> Seq Scan on minervdb_bench (cost=0.00..169247.71 rows=9999977 width=4)

JIT:

Functions: 6

Options: Inlining true, Optimization true, Expressions true, Deforming true

(8 rows)

Using pg_stat_statements

We can use pg_stat_statements to group identical PostgreSQL queries by latency. To enable pg_stat_statements, add the following lines to postgresql.conf and restart the PostgreSQL server:

# postgresql.conf

shared_preload_libraries = 'pg_stat_statements'

pg_stat_statements.max = 10000

pg_stat_statements.track = all

Run “CREATE EXTENSION pg_stat_statements” in your database so that PostgreSQL will create a view for you:

postgres=# CREATE EXTENSION pg_stat_statements;

CREATE EXTENSION

postgres=# \d pg_stat_statements

View "public.pg_stat_statements"

Column | Type | Collation | Nullable | Default

---------------------+------------------+-----------+----------+---------

userid | oid | | |

dbid | oid | | |

queryid | bigint | | |

query | text | | |

calls | bigint | | |

total_time | double precision | | |

min_time | double precision | | |

max_time | double precision | | |

mean_time | double precision | | |

stddev_time | double precision | | |

rows | bigint | | |

shared_blks_hit | bigint | | |

shared_blks_read | bigint | | |

shared_blks_dirtied | bigint | | |

shared_blks_written | bigint | | |

local_blks_hit | bigint | | |

local_blks_read | bigint | | |

local_blks_dirtied | bigint | | |

local_blks_written | bigint | | |

temp_blks_read | bigint | | |

temp_blks_written | bigint | | |

blk_read_time | double precision | | |

blk_write_time | double precision | | |

postgres=#

pg_stat_statements view columns explained (Source: https://www.postgresql.org/docs/12/pgstatstatements.html)

| Name | Type | References | Description |
|---|---|---|---|
| userid | oid | pg_authid.oid | OID of user who executed the statement |
| dbid | oid | pg_database.oid | OID of database in which the statement was executed |
| queryid | bigint | | Internal hash code, computed from the statement’s parse tree |
| query | text | | Text of a representative statement |
| calls | bigint | | Number of times executed |
| total_time | double precision | | Total time spent in the statement, in milliseconds |
| min_time | double precision | | Minimum time spent in the statement, in milliseconds |
| max_time | double precision | | Maximum time spent in the statement, in milliseconds |
| mean_time | double precision | | Mean time spent in the statement, in milliseconds |
| stddev_time | double precision | | Population standard deviation of time spent in the statement, in milliseconds |
| rows | bigint | | Total number of rows retrieved or affected by the statement |
| shared_blks_hit | bigint | | Total number of shared block cache hits by the statement |
| shared_blks_read | bigint | | Total number of shared blocks read by the statement |
| shared_blks_dirtied | bigint | | Total number of shared blocks dirtied by the statement |
| shared_blks_written | bigint | | Total number of shared blocks written by the statement |
| local_blks_hit | bigint | | Total number of local block cache hits by the statement |
| local_blks_read | bigint | | Total number of local blocks read by the statement |
| local_blks_dirtied | bigint | | Total number of local blocks dirtied by the statement |
| local_blks_written | bigint | | Total number of local blocks written by the statement |
| temp_blks_read | bigint | | Total number of temp blocks read by the statement |
| temp_blks_written | bigint | | Total number of temp blocks written by the statement |
| blk_read_time | double precision | | Total time the statement spent reading blocks, in milliseconds (if track_io_timing is enabled, otherwise zero) |
| blk_write_time | double precision | | Total time the statement spent writing blocks, in milliseconds (if track_io_timing is enabled, otherwise zero) |

You can list queries by latency / Response Time in PostgreSQL by querying pg_stat_statements:

postgres=# \x

Expanded display is on.

select query,calls,total_time,min_time,max_time,mean_time,stddev_time,rows from pg_stat_statements order by mean_time desc;

-[ RECORD 1 ]---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

query | SELECT count(*) FROM minervdb_bench GROUP BY id % $1

calls | 6

total_time | 33010.533078

min_time | 4197.876021

max_time | 6485.33594

mean_time | 5501.755512999999

stddev_time | 826.3716429081501

rows | 72

-[ RECORD 2 ]---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

query | CREATE INDEX idx_id ON minervdb_bench(id)

calls | 1

total_time | 4560.808456

min_time | 4560.808456

max_time | 4560.808456

mean_time | 4560.808456

stddev_time | 0

rows | 0

-[ RECORD 3 ]---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

query | ANALYZE

calls | 1

total_time | 441.725223

min_time | 441.725223

max_time | 441.725223

mean_time | 441.725223

stddev_time | 0

rows | 0

-[ RECORD 4 ]-------------------------------------------------------------------------------------------------------------------

query | SELECT a.attname, +

| pg_catalog.format_type(a.atttypid, a.atttypmod), +

| (SELECT substring(pg_catalog.pg_get_expr(d.adbin, d.adrelid, $1) for $2) +

| FROM pg_catalog.pg_attrdef d +

| WHERE d.adrelid = a.attrelid AND d.adnum = a.attnum AND a.atthasdef), +

| a.attnotnull, +

| (SELECT c.collname FROM pg_catalog.pg_collation c, pg_catalog.pg_type t +

| WHERE c.oid = a.attcollation AND t.oid = a.atttypid AND a.attcollation <> t.typcollation) AS attcollation, +

| a.attidentity, +

| a.attgenerated +

| FROM pg_catalog.pg_attribute a +

| WHERE a.attrelid = $3 AND a.attnum > $4 AND NOT a.attisdropped +

| ORDER BY a.attnum

calls | 4

total_time | 1.053107

min_time | 0.081565

max_time | 0.721785

mean_time | 0.26327675000000006

stddev_time | 0.2658756938743884

rows | 86
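Ordering by mean_time surfaces the slowest statements per call, but a fast statement that is called very often can still dominate the workload, so it can help to look at each statement's share of total execution time as well. The Python sketch below uses the calls and total_time values from the output above; the `share_pct` figure and the function name `with_share` are our own illustrative additions, not columns of pg_stat_statements.

```python
# Values copied from the pg_stat_statements output above (times in milliseconds).
rows = [
    {"query": "SELECT count(*) FROM minervdb_bench GROUP BY id % $1", "calls": 6, "total_time": 33010.533078},
    {"query": "CREATE INDEX idx_id ON minervdb_bench(id)", "calls": 1, "total_time": 4560.808456},
    {"query": "ANALYZE", "calls": 1, "total_time": 441.725223},
    {"query": "SELECT a.attname, ... ORDER BY a.attnum", "calls": 4, "total_time": 1.053107},
]


def with_share(rows):
    """Add mean_time and the share of total execution time, largest share first."""
    grand_total = sum(r["total_time"] for r in rows)
    enriched = [
        {
            **r,
            "mean_time": r["total_time"] / r["calls"],
            "share_pct": 100.0 * r["total_time"] / grand_total,
        }
        for r in rows
    ]
    return sorted(enriched, key=lambda r: r["total_time"], reverse=True)


for r in with_share(rows):
    print(f'{r["share_pct"]:6.2f}%  mean {r["mean_time"]:10.3f} ms  {r["query"]}')
```

In this small sample the two orderings agree, because the GROUP BY query is both the slowest per call and the largest consumer of time overall.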

If you already know that the epicenter of the bottleneck is a particular query or event / time, you can reset the statistics just before that query / event to monitor the problematic components of PostgreSQL performance. You can do that by calling pg_stat_statements_reset(), as shown below:

postgres=# SELECT pg_stat_statements_reset();

Conclusion

Performance tuning is the process of optimizing PostgreSQL performance by streamlining the execution of multiple SQL statements. In other words, performance tuning simplifies the process of accessing and altering information contained by the database with the intention of improving query response times and database application operations.

The post MinervaDB Webinar: PostgreSQL Internals and Performance Optimization appeared first on The WebScale Database Infrastructure Operations Experts.

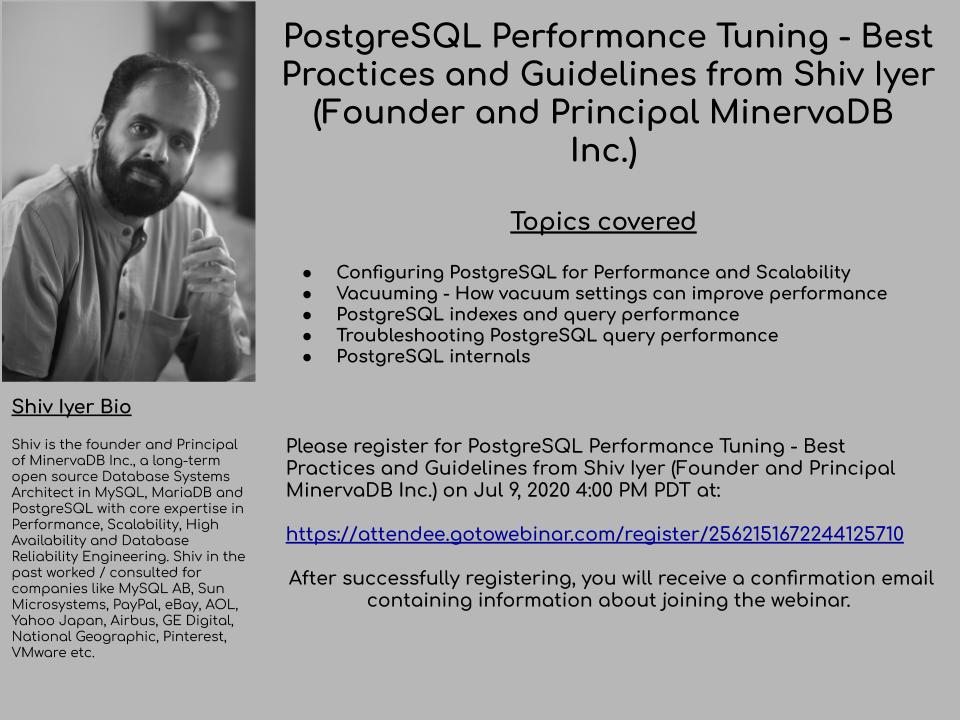

Our founder and Principal, Shiv Iyer, presented a webinar (July 09, 2020) on PostgreSQL Internals and Performance Optimization. Shiv is a longtime Open Source Database Systems Operations expert with core expertise in performance optimization, capacity planning / sizing, architecture / internals, transaction processing engineering, horizontal scalability & partitioning, storage optimization, distributed database systems and data compression algorithms. The core objective of this webinar was to talk about PostgreSQL internals, troubleshooting PostgreSQL query performance, index optimization, partitioning, PostgreSQL configuration parameters and best practices. We strongly believe that understanding PostgreSQL architecture and internals is very important for troubleshooting PostgreSQL performance proactively and efficiently. You can download the PDF copy of the webinar here. If you want the recorded video of the webinar, please contact support@minervadb.com.

Contact MinervaDB for Enterprise-Class PostgreSQL Consulting and 24*7 Consultative Support

The post PostgreSQL Internals and Performance Troubleshooting Webinar appeared first on The WebScale Database Infrastructure Operations Experts.

MinervaDB provides full-stack PostgreSQL consulting, support and managed Remote DBA Services for several customers globally, addressing performance, scalability, high availability and database reliability engineering. Our prospective customers and fellow DBAs are often curious about how we at MinervaDB troubleshoot PostgreSQL performance, so we decided to share our approach through a webinar on “PostgreSQL Internals and Performance Troubleshooting”. This webinar is hosted by Shiv Iyer (Founder and Principal of MinervaDB), a longtime Open Source Database Systems Operations expert with core expertise in performance optimization, capacity planning / sizing, architecture / internals, transaction processing engineering, horizontal scalability & partitioning, storage optimization, distributed database systems and data compression algorithms. You can register for the webinar here.

Contact MinervaDB for Enterprise-Class PostgreSQL Consulting and 24*7 Consultative Support

The post PostgreSQL Internals and Performance Troubleshooting Webinar appeared first on The WebScale Database Infrastructure Operations Experts.

]]>The post How PostgreSQL Autovacuum works ? appeared first on The WebScale Database Infrastructure Operations Experts.

]]>What is VACUUM in PostgreSQL ?

In PostgreSQL, whenever rows in a table are deleted, the existing row (tuple) is marked as dead rather than physically removed. During an update, PostgreSQL marks the corresponding existing tuple as dead and inserts a new tuple, so in PostgreSQL an UPDATE is effectively a DELETE plus an INSERT. These dead tuples consume storage unnecessarily and eventually leave you with a bloated PostgreSQL database, which is a serious issue for a PostgreSQL DBA to solve. VACUUM reclaims the storage occupied by dead tuples. Please note that a plain VACUUM generally does not give the reclaimed space back to the operating system; the space is freed within the same database pages and kept for reuse by future inserts and updates in the same table. Does the pain stop here? No, it doesn't. Bloat seriously affects PostgreSQL query performance. PostgreSQL stores tables and indexes as arrays of fixed-size pages (usually 8KB in size). Whenever a query requests rows, the PostgreSQL instance loads these pages into memory, and pages filled with dead rows mean more pages have to be read for the same live data, which causes expensive disk I/O.

Blame PostgreSQL Multi-Version Concurrency Control (MVCC) for the bloat. MVCC in PostgreSQL keeps each transaction isolated (part of ACID compliance in transaction management), so readers never block writers and vice versa. Every transaction (a single insert, update or delete, or a group of statements explicitly wrapped together via BEGIN – COMMIT) is identified by a transaction ID called an XID. When a transaction starts, Postgres increments the XID counter and assigns the new XID to the current transaction. PostgreSQL also stores transaction information on every row in the system, which is used to determine whether a row is visible to a given transaction or not. Because different transactions have visibility to different sets of rows, PostgreSQL needs to maintain potentially obsolete records. This is why an UPDATE actually creates a new row and why a DELETE doesn't really remove the row: it merely marks it as deleted and sets the XID values appropriately. As transactions complete, there will be rows in the database that cannot possibly be visible to any future transaction. These are called dead rows (technically, bloated records in PostgreSQL).
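The row-versioning idea described above can be sketched with a toy model. This is only an illustration, not how PostgreSQL is implemented: the class and method names are hypothetical, and the model assumes every transaction commits instantly and in XID order, which real MVCC does not.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TupleVersion:
    value: str
    xmin: int                   # XID of the transaction that created this version
    xmax: Optional[int] = None  # XID of the transaction that deleted/replaced it


class ToyMvccTable:
    """Toy heap. Assumption: each statement is its own instantly committed transaction."""

    def __init__(self):
        self.versions = []
        self.next_xid = 1

    def _new_xid(self):
        xid = self.next_xid
        self.next_xid += 1
        return xid

    def insert(self, value):
        xid = self._new_xid()
        self.versions.append(TupleVersion(value, xmin=xid))
        return xid

    def update(self, old_value, new_value):
        # UPDATE = mark the live version as deleted + insert a new version.
        xid = self._new_xid()
        for version in self.versions:
            if version.value == old_value and version.xmax is None:
                version.xmax = xid
                break
        else:
            raise KeyError(old_value)
        self.versions.append(TupleVersion(new_value, xmin=xid))
        return xid

    def visible(self, snapshot_xid):
        """Values seen by a snapshot that includes every XID <= snapshot_xid."""
        return [v.value for v in self.versions
                if v.xmin <= snapshot_xid
                and (v.xmax is None or v.xmax > snapshot_xid)]

    def dead(self, oldest_snapshot_xid):
        """Versions that no snapshot at or after oldest_snapshot_xid can see."""
        return [v.value for v in self.versions
                if v.xmax is not None and v.xmax <= oldest_snapshot_xid]


table = ToyMvccTable()
table.insert("v1")        # XID 1
table.update("v1", "v2")  # XID 2: "v1" stays on disk, now with xmax = 2

old_reader = table.visible(1)   # a snapshot taken before the update
new_reader = table.visible(2)   # a snapshot taken after the update
dead_rows = table.dead(2)       # what VACUUM could reclaim once old readers are gone
```

In this sketch both versions remain physically stored after the update; the old one only becomes reclaimable once no snapshot older than XID 2 is still in use.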

How does PostgreSQL handle bloat for optimal performance and storage efficiency?

In the past (pre PostgreSQL 8), PostgreSQL DBAs reclaimed the storage from dead tuples by running the VACUUM command manually. This was a daunting task because DBAs needed to balance the resource utilization of vacuuming against the current transaction volume / load to plan when to run it, and also when to abort it. The PostgreSQL "autovacuum" feature made managing database bloat and vacuuming much simpler for DBAs.

How does autovacuum work in PostgreSQL?

Autovacuum is one of PostgreSQL's background utility processes. It vacuums a table automatically when the number of dead tuples in that table, caused by updates and deletes, exceeds an effective threshold. How often autovacuum checks is controlled by the PostgreSQL configuration parameter autovacuum_naptime (default is 1 minute). On each round autovacuum attempts to start a new worker process, and how many workers can run at the same time depends on the value of the configuration parameter autovacuum_max_workers (default 3). The worker searches for tables where PostgreSQL's statistics records indicate that a large enough number of rows have changed relative to the table size. The formula is:

The formula applied by the autovacuum process to identify tables that are bloated and need vacuuming:

[estimated rows invalidated] ≥ autovacuum_vacuum_scale_factor * [estimated table size] + autovacuum_vacuum_threshold
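As a worked example of the formula above, the following minimal sketch computes the trigger point for a table. The function names are hypothetical; the default arguments mirror the documented defaults (scale factor 0.2, threshold 50), and the comparison uses ≥ exactly as the formula is written in this post.

```python
def autovacuum_vacuum_trigger(estimated_rows, scale_factor=0.2, threshold=50):
    """Number of invalidated rows at which autovacuum would pick the table."""
    return scale_factor * estimated_rows + threshold


def needs_vacuum(dead_rows, estimated_rows, scale_factor=0.2, threshold=50):
    """Apply the formula from this post to one table."""
    return dead_rows >= autovacuum_vacuum_trigger(estimated_rows, scale_factor, threshold)


# A table with one million rows, using the default settings:
default_trigger = autovacuum_vacuum_trigger(1_000_000)

# The same table with the smaller scale factor suggested for large tables:
tuned_trigger = autovacuum_vacuum_trigger(1_000_000, scale_factor=0.01)

print(f"default trigger: about {default_trigger:,.0f} dead rows")
print(f"tuned trigger:   about {tuned_trigger:,.0f} dead rows")
```

With the defaults, roughly a fifth of a one-million-row table has to be dead before autovacuum reacts, which is why a smaller scale factor is usually suggested for large tables.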

This is what happens internally within PostgreSQL during the autovacuum process:

The worker processes start removing dead tuples and compacting pages aggressively, but this activity consumes intense disk I/O throughput. Autovacuum keeps a running total of these I/O costs, and when the total exceeds autovacuum_vacuum_cost_limit the worker pauses for a few milliseconds, depending on the value of the configuration parameter autovacuum_vacuum_cost_delay (default is 20 milliseconds up to PostgreSQL 11, and 2 milliseconds from PostgreSQL 12). Vacuuming is a resource-hogging and time-consuming activity because every vacuum worker has to remove the index entries that point to dead rows before it can compact the pages. If you have deployed PostgreSQL on infrastructure with limited memory / RAM, then the maintenance_work_mem configuration parameter will be set very conservatively, and each worker can then process only a limited number of dead rows per pass, which makes vacuum fall behind.
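The cost-based pausing described above can be approximated with a small simulation. This is a simplified model under stated assumptions, not PostgreSQL's actual accounting: the function name is hypothetical, the per-page costs (1 for a buffer hit, 10 for a miss, 20 for dirtying a page) are passed in as parameters because their defaults differ between PostgreSQL versions, and every pause is assumed to last exactly the configured delay.

```python
def simulate_vacuum_throttle(page_events, cost_limit=200, cost_delay_ms=20.0,
                             cost_hit=1, cost_miss=10, cost_dirty=20):
    """Return (number_of_pauses, total_pause_ms) for a sequence of page events.

    page_events is an iterable of "hit", "miss" or "dirty" strings.
    """
    costs = {"hit": cost_hit, "miss": cost_miss, "dirty": cost_dirty}
    balance = 0
    pauses = 0
    for event in page_events:
        balance += costs[event]
        if balance >= cost_limit:
            pauses += 1   # the worker sleeps for cost_delay_ms here
            balance = 0   # and starts accumulating cost again
    return pauses, pauses * cost_delay_ms


# 10,000 pages that all have to be read from disk ("miss"):
pages = ["miss"] * 10_000

default_pauses, default_sleep_ms = simulate_vacuum_throttle(pages)
relaxed_pauses, relaxed_sleep_ms = simulate_vacuum_throttle(pages, cost_limit=2000)

print(default_pauses, default_sleep_ms)  # 500 pauses, 10000.0 ms asleep
print(relaxed_pauses, relaxed_sleep_ms)  # 50 pauses, 1000.0 ms asleep
```

Under these assumptions, raising the cost limit tenfold cuts the time the worker spends asleep tenfold for the same amount of page work, which is the lever to consider when vacuum is falling behind on fast storage.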

How to configure Autovacuum parameters ?

The default autovacuum settings work well for PostgreSQL databases of a few GB but are not recommended for larger PostgreSQL infrastructure, because vacuum will fall behind as data and transaction volume grow. Once vacuum has fallen behind, it directly impacts query execution plans and performance. This often tempts DBAs to run autovacuum less frequently or not at all, which only makes the bloat worse. The following matrix recommends sizing of configuration parameters for larger PostgreSQL database instances:

| PostgreSQL Autovacuum configuration parameter | How to tune PostgreSQL Autovacuum configuration parameter for Performance and Reliability |
|---|---|
| autovacuum (boolean) | This configuration parameter decides whether your PostgreSQL server should run the autovacuum launcher daemon process. Technically you can never fully disable autovacuum, because even when this parameter is disabled the system will launch autovacuum processes if necessary to prevent transaction ID wraparound. P.S. - You have to enable track_counts for autovacuum to work. |
| log_autovacuum_min_duration (integer) | Set this parameter to track autovacuum activity: every autovacuum action that runs for at least the given number of milliseconds is logged. 0 logs all autovacuum actions and -1 disables logging. |
| autovacuum_max_workers (integer) | This parameter specifies the maximum number of autovacuum processes (other than the autovacuum launcher) that may be running at any one time. The default is 3 and we recommend 6 to 8 for PostgreSQL performance. |
| autovacuum_naptime (integer) | This parameter specifies the minimum delay between autovacuum runs on any given database. The delay is measured in seconds, and the default is one minute (1min). We recommend leaving this parameter untouched even when you have very large PostgreSQL tables with DELETEs and UPDATEs. |
| autovacuum_vacuum_threshold (integer) | This parameter specifies the minimum number of updated or deleted tuples needed to trigger a VACUUM in any one table. The default is 50 tuples. This parameter can be set larger when you have smaller tables. |
| autovacuum_analyze_threshold (integer) | This parameter specifies the minimum number of inserted, updated or deleted tuples needed to trigger an ANALYZE in any one table. The default is 50 tuples. This parameter can be set larger when you have smaller tables. |
| autovacuum_vacuum_scale_factor (floating point) | This parameter specifies a fraction of the table size to add to autovacuum_vacuum_threshold when deciding whether to trigger a VACUUM. The default is 0.2 (20% of table size). If you have larger PostgreSQL tables we recommend smaller values (0.01). |
| autovacuum_analyze_scale_factor (floating point) | This parameter specifies a fraction of the table size to add to autovacuum_analyze_threshold when deciding whether to trigger an ANALYZE. The default is 0.1 (10% of table size). If you have larger PostgreSQL tables we recommend smaller values (0.01). |
| autovacuum_freeze_max_age (integer) | This parameter specifies the maximum age (in transactions) that a table's pg_class.relfrozenxid field can attain before a VACUUM operation is forced to prevent transaction ID wraparound within the table. P.S. - PostgreSQL will launch autovacuum processes to prevent wraparound even when autovacuum is otherwise disabled. |
| autovacuum_multixact_freeze_max_age (integer) | This parameter specifies the maximum age (in multixacts) that a table's pg_class.relminmxid field can attain before a VACUUM operation is forced to prevent multixact ID wraparound within the table. P.S. - PostgreSQL will launch autovacuum processes to prevent wraparound even when autovacuum is otherwise disabled. |
| autovacuum_vacuum_cost_delay (integer) | This parameter specifies the cost delay value that will be used in automatic VACUUM operations. If -1 is specified, the regular vacuum_cost_delay value will be used. The default value is 20 milliseconds (2 milliseconds from PostgreSQL 12). P.S. - The default value works even when you have very large PostgreSQL database infrastructure. |
| autovacuum_vacuum_cost_limit (integer) | This parameter specifies the cost limit value that will be used in automatic VACUUM operations. If -1 is specified (which is the default), the regular vacuum_cost_limit value (default 200) will be used. P.S. - The default value works even when you have very large PostgreSQL database infrastructure. |
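The scale factor and threshold in the matrix above can also be set per table as storage parameters, which is often more practical than lowering them globally. The helper below is a hypothetical sketch that derives a scale factor from a target number of dead rows and renders the corresponding ALTER TABLE statement. The table name `orders` and the row counts are made up for illustration, and the helper does no identifier quoting.

```python
def per_table_autovacuum_sql(table, estimated_rows, target_dead_rows, threshold=50):
    """Render an ALTER TABLE statement so that autovacuum triggers at roughly
    target_dead_rows dead rows for a table of estimated_rows rows."""
    if estimated_rows <= 0:
        raise ValueError("estimated_rows must be positive")
    # Invert the trigger formula: target = scale_factor * rows + threshold
    scale_factor = max(target_dead_rows - threshold, 0) / estimated_rows
    return (
        f"ALTER TABLE {table} SET ("
        f"autovacuum_vacuum_scale_factor = {scale_factor:.4f}, "
        f"autovacuum_vacuum_threshold = {threshold});"
    )


# Hypothetical 50-million-row table that should be vacuumed at ~500,050 dead rows:
statement = per_table_autovacuum_sql("orders", 50_000_000, 500_050)
print(statement)
```

For this input the derived scale factor is 0.01, which matches the value recommended for larger tables in the matrix; review any generated statement before running it against a production database.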

Interesting links for extra reading

- https://www.citusdata.com/blog/2016/11/04/autovacuum-not-the-enemy/

- https://www.postgresql.org/docs/11/sql-vacuum.html

- https://devcenter.heroku.com/articles/postgresql-concurrency

- https://github.com/pgexperts/pgx_scripts/tree/master/bloat

☛ MinervaDB contacts – Sales & General Inquiries

| Business Function | Contact |
|---|---|
| CONTACT GLOBAL SALES (24*7) | (844) 588-7287 (USA), (415) 212-6625 (USA), (778) 770-5251 (Canada) |
| TOLL FREE PHONE (24*7) | (844) 588-7287 |
| MINERVADB FAX | +1 (209) 314-2364 |
| MinervaDB Email - General / Sales / Consulting | contact@minervadb.com |
| MinervaDB Email - Support | support@minervadb.com |
| MinervaDB Email - Remote DBA | remotedba@minervadb.com |
| Shiv Iyer Email - Founder and Principal | shiv@minervadb.com |
| CORPORATE ADDRESS: CALIFORNIA | MinervaDB Inc., 340 S LEMON AVE #9718 WALNUT 91789 CA, US |
| CORPORATE ADDRESS: DELAWARE | MinervaDB Inc., PO Box 2093 PHILADELPHIA PIKE #3339 CLAYMONT, DE 19703 |
| CORPORATE ADDRESS: HOUSTON | MinervaDB Inc., 1321 Upland Dr. PMB 19322, Houston, TX 77043, US |

The post How PostgreSQL Autovacuum works ? appeared first on The WebScale Database Infrastructure Operations Experts.

]]>The post How PostgreSQL 11 made adding new table columns with default values faster ? appeared first on The WebScale Database Infrastructure Operations Experts.

]]>Before PostgreSQL 11, adding a new table column with a non-null default value resulted in a rewrite of the entire table. This works fine for smaller data sets, but it becomes complicated and expensive on high-volume databases because the rewrite holds an ACCESS EXCLUSIVE lock on the table (ACCESS EXCLUSIVE is also the default lock mode for LOCK TABLE statements that do not specify a mode explicitly). This lock conflicts with locks of all modes (ACCESS SHARE, ROW SHARE, ROW EXCLUSIVE, SHARE UPDATE EXCLUSIVE, SHARE, SHARE ROW EXCLUSIVE, EXCLUSIVE, and ACCESS EXCLUSIVE) and guarantees that the holder of the lock is the only transaction accessing the table in any way. It blocks every other operation until it is released; even simple SELECT statements have to wait. This is unacceptable for a table that is accessed continuously 24*7 and is a serious performance bottleneck. PostgreSQL 11 addresses this problem gracefully by storing the default value in the catalog. Rows that existed at the time the change was made are not rewritten; when such a row is fetched, the value stored in the catalog is supplied for the new column. New rows, and new versions of existing rows, are written with the default value in place. This makes adding new table columns with default values faster and smarter. To conclude, the default value doesn't have to be a static expression. It can even be a non-volatile expression like CURRENT_TIMESTAMP, but volatile expressions such as random(), currval() and timeofday() will still result in table rewrites.
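The catalog-stored default described above can be illustrated with a toy model. This is a sketch of the idea only, not PostgreSQL's storage format: the class, its attributes and the column names are hypothetical, and the `missing_values` dictionary merely stands in for the value PostgreSQL keeps in its catalog.

```python
class FastDefaultTable:
    """Toy table where ADD COLUMN ... DEFAULT does not touch existing rows."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.defaults = {}        # column -> default applied to newly written rows
        self.missing_values = {}  # column -> value for rows written before it existed
        self.rows = []            # physically stored rows

    def insert(self, **values):
        # New rows are written with the default value in place.
        row = {col: values.get(col, self.defaults.get(col)) for col in self.columns}
        self.rows.append(row)

    def add_column(self, name, default):
        # No loop over self.rows: the table is not rewritten.
        self.columns.append(name)
        self.defaults[name] = default
        self.missing_values[name] = default

    def select_all(self):
        # Old rows lack the column physically; fill it in from the "catalog".
        return [
            {col: row[col] if col in row else self.missing_values.get(col)
             for col in self.columns}
            for row in self.rows
        ]


t = FastDefaultTable(["id"])
t.insert(id=1)
t.insert(id=2)
t.add_column("status", default="new")  # instant, regardless of row count
t.insert(id=3)
t.insert(id=4, status="done")

stored_first_row = t.rows[0]   # still {"id": 1}: never rewritten
result = t.select_all()        # every row reports a status
```

The cost of `add_column` in this sketch does not depend on how many rows already exist, which is the property that makes the PostgreSQL 11 behaviour attractive for large, busy tables.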

If you want MinervaDB PostgreSQL consultants to help you with PostgreSQL performance optimization and tuning, please book a no-obligation PostgreSQL consulting session below:

The post How PostgreSQL 11 made adding new table columns with default values faster ? appeared first on The WebScale Database Infrastructure Operations Experts.

]]>The post Top Three Most Compelling New Features From PostgreSQL 12 appeared first on The WebScale Database Infrastructure Operations Experts.

]]>PostgreSQL 12 provides significant performance and maintenance enhancements to its indexing system and to partitioning. PostgreSQL 12 introduces the ability to run queries over JSON documents using JSON path expressions defined in the SQL/JSON standard. Such queries may utilize the existing indexing mechanisms for documents stored in the JSONB format to efficiently retrieve data. PostgreSQL 12 extends its support of ICU collations by allowing users to define "nondeterministic collations" that can, for example, allow case-insensitive or accent-insensitive comparisons. PostgreSQL 12 enhancements include notable improvements to query performance, particularly over larger data sets, and overall space utilization. This release provides application developers with new capabilities such as SQL/JSON path expression support, optimizations for how common table expression (WITH) queries are executed, and generated columns.

The following are the top three most interesting features introduced in PostgreSQL 12:

1. Much better indexing for performance and optimal space management in PostgreSQL 12 – Why do we worry so much about indexing in database systems? Large amounts of data cannot fit in main memory. As the number of keys grows, you eventually read more from disk than from main memory, and disk access time is very high compared to main memory access time. We use B-tree indexes to reduce the number of disk accesses. A B-tree is a data structure that stores keys in sorted (ascending) order within each node; each node holds an array of entries, and each entry references a child node. We spend a significant amount of time reclaiming the storage occupied by dead tuples, and part of that is due to bloat in PostgreSQL indexes, which takes up extra storage on disk. Thanks to PostgreSQL 12, we now have much better B-tree indexing, which can reduce space utilization by up to 40% and improves overall query performance, which means both faster WRITEs and READs. PostgreSQL 12 also introduces the ability to rebuild indexes without blocking writes to an index via the REINDEX CONCURRENTLY command, allowing users to avoid downtime scenarios for lengthy index rebuilds.

2. ALTER TABLE ATTACH PARTITION without blocking queries – In PostgreSQL, every lock has a queue. If transaction T2 tries to acquire a lock that is already held by transaction T1 with a conflicting lock level, then transaction T2 will wait in the lock queue. Now something interesting happens: if another transaction T3 comes in, then it will not only have to check for conflict with T1, but also with transaction T2, and with any other transaction in the lock queue. So even if your DDL command can run very quickly, it might sit in a queue for a long time waiting for queries to finish, and queries that start after it will be blocked behind it. This is why it matters that, in PostgreSQL 12, ALTER TABLE ... ATTACH PARTITION no longer blocks queries on the partitioned table. PostgreSQL supports partitioning, which is about logically splitting one large table into several pieces. Partitioning improves query performance: it substitutes for leading columns of indexes, reducing index size and making it more likely that the heavily used parts of the indexes fit in memory. Until PostgreSQL 11, an INSERT into a partitioned table locked every partition of that table, whether or not the partition received a new record. At large scale, with a large number of partitions, this could become a serious bottleneck. Starting from PostgreSQL 12, when we insert a row, only the related partition is locked. This results in much better performance at higher partition counts, especially when inserting just 1 row at a time.

3. JSON Path support in Postgres 12 – The JSON data type was introduced in PostgreSQL 9.2, and since then PostgreSQL's commitment to JSON data management has increased significantly. The major addition came in PostgreSQL 9.4 with the JSONB data type, an advanced version of the JSON data type that stores JSON data in a binary format. The SQL:2016 standard introduced JSON and various ways to query JSON values, and PostgreSQL 12 supports JSON Path accordingly. JSON Path in PostgreSQL is implemented as the jsonpath data type, which is the binary representation of a parsed SQL/JSON path expression. The main task of the path language is to specify the parts (the projection) of JSON data to be retrieved by the path engine for the SQL/JSON query functions. With this, PostgreSQL 12 can run queries over JSON documents using JSON path expressions defined in the SQL/JSON standard, and such queries may utilize the existing indexing mechanisms for documents stored in the JSONB format to efficiently retrieve data.
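The lock-queue behaviour described in item 2 above can be sketched with a toy model. It is a deliberately simplified illustration, not PostgreSQL's lock manager: the class is hypothetical, only two lock modes are modelled, and a new request is assumed to wait whenever it conflicts with any lock that is held or already queued.

```python
# Only the two modes needed for the example; ACCESS SHARE conflicts
# solely with ACCESS EXCLUSIVE, which conflicts with everything.
CONFLICTS = {
    ("ACCESS SHARE", "ACCESS EXCLUSIVE"),
    ("ACCESS EXCLUSIVE", "ACCESS SHARE"),
    ("ACCESS EXCLUSIVE", "ACCESS EXCLUSIVE"),
}


def conflicts(mode_a, mode_b):
    return (mode_a, mode_b) in CONFLICTS


class ToyLockQueue:
    """Toy lock queue for a single table."""

    def __init__(self):
        self.holders = []  # (transaction, mode) pairs that hold the lock
        self.waiters = []  # (transaction, mode) pairs waiting, in FIFO order

    def request(self, txn, mode):
        """Return the transactions blocking this request (empty list = granted)."""
        blockers = [t for t, m in self.holders + self.waiters if conflicts(mode, m)]
        if blockers:
            self.waiters.append((txn, mode))
        else:
            self.holders.append((txn, mode))
        return blockers

    def release(self, txn):
        self.holders = [(t, m) for t, m in self.holders if t != txn]
        # Grant waiters in order until one still conflicts with a holder.
        while self.waiters:
            _, mode = self.waiters[0]
            if any(conflicts(mode, held) for _, held in self.holders):
                break
            self.holders.append(self.waiters.pop(0))


queue = ToyLockQueue()
t1_blockers = queue.request("T1", "ACCESS SHARE")      # long-running SELECT
t2_blockers = queue.request("T2", "ACCESS EXCLUSIVE")  # quick DDL, must wait for T1
t3_blockers = queue.request("T3", "ACCESS SHARE")      # new SELECT, stuck behind T2

queue.release("T1")
holders_after_t1 = list(queue.holders)  # T2 now holds the lock; T3 still waits
```

T3 would not conflict with T1 at all, yet it waits because the queued DDL from T2 is ahead of it, which is exactly the pile-up that reduced lock levels in PostgreSQL 12 help avoid.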

References

- Gentle Guide to JSONPATH in PostgreSQL – https://github.com/obartunov/sqljsondoc/blob/master/jsonpath.md

- PostgreSQL release notes – https://www.postgresql.org/about/news/1976/

Book a no-obligation consultation with the MinervaDB PostgreSQL Team

The post Top Three Most Compelling New Features From PostgreSQL 12 appeared first on The WebScale Database Infrastructure Operations Experts.

]]>